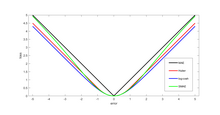

Loss function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function)[1] is a function that maps an event, or the values of one or more variables, onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function, which is to be minimized, or its opposite (in specific domains variously called a reward function, a profit function, a utility function, a fitness function, etc.), which is to be maximized. The loss function could include terms from several levels of the hierarchy.
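As an illustration of the relationship between minimizing a loss and maximizing its opposite, the following minimal sketch uses a made-up quadratic loss and a plain grid search. The function names `loss` and `utility`, the target value 3, and the candidate grid are assumptions chosen for the example, not part of any particular library or method.

```python
def loss(x: float) -> float:
    """Hypothetical quadratic loss: the "cost" of choosing x, smallest at x = 3."""
    return (x - 3.0) ** 2


def utility(x: float) -> float:
    """The opposite of the loss, to be maximized instead of minimized."""
    return -loss(x)


# Candidate values 0.0, 0.1, ..., 6.0 (an arbitrary grid for illustration).
candidates = [i / 10 for i in range(0, 61)]

# Minimizing the loss and maximizing its opposite select the same candidate.
best_by_loss = min(candidates, key=loss)
best_by_utility = max(candidates, key=utility)

print(best_by_loss, best_by_utility)
```

Both searches pick the same point, which is why an objective function can be stated either way without changing the solution.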

In statistics, a loss function is typically used for parameter estimation, and the event in question is some function of the difference between the estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century.[2] In economics, for example, the loss is usually economic cost or regret. In classification, it is the penalty for an incorrect classification of an example. In actuarial science, it is used in an insurance context to model benefits paid over premiums, particularly since the works of Harald Cramér in the 1920s.[3] In optimal control, the loss is the penalty for failing to achieve a desired value. In financial risk management, the function is mapped to a monetary loss.
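To make the statistical and classification cases concrete, here is a small sketch of three commonly used losses: squared error, absolute error, and zero-one loss. The function names, the sample data, and the integer search grid are hypothetical and serve only to illustrate the idea. The example relies on two standard facts: the sample mean minimizes average squared error, and the sample median minimizes average absolute error.

```python
import statistics


def squared_error(estimate: float, true_value: float) -> float:
    """Loss as the squared difference between estimated and true values."""
    return (estimate - true_value) ** 2


def absolute_error(estimate: float, true_value: float) -> float:
    """Loss as the absolute difference between estimated and true values."""
    return abs(estimate - true_value)


def zero_one_loss(predicted_label: str, true_label: str) -> int:
    """Classification loss: 1 for an incorrect classification, 0 otherwise."""
    return 0 if predicted_label == true_label else 1


def average_loss(loss_fn, estimate: float, observations: list) -> float:
    """Mean loss of a single estimate over all observations."""
    return sum(loss_fn(estimate, y) for y in observations) / len(observations)


# Made-up observations with one large value, to separate mean and median.
data = [1.0, 2.0, 3.0, 4.0, 10.0]
grid = [float(c) for c in range(0, 11)]  # candidate estimates 0, 1, ..., 10

best_squared = min(grid, key=lambda c: average_loss(squared_error, c, data))
best_absolute = min(grid, key=lambda c: average_loss(absolute_error, c, data))

print(best_squared, statistics.mean(data))     # squared error favors the mean
print(best_absolute, statistics.median(data))  # absolute error favors the median
print(zero_one_loss("cat", "dog"))             # a misclassification costs 1
```

The choice of loss changes which estimate counts as best: on this data the squared-error minimizer is pulled toward the large observation, while the absolute-error minimizer is not.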

- [1] Hastie, Trevor; Tibshirani, Robert; Friedman, Jerome H. (2001). The Elements of Statistical Learning. Springer. p. 18. ISBN 0-387-95284-5.

- [2] Wald, A. (1950). Statistical Decision Functions. Wiley.

- [3] Cramér, H. (1930). On the mathematical theory of risk. Centraltryckeriet.
