
Radiocarbon dating

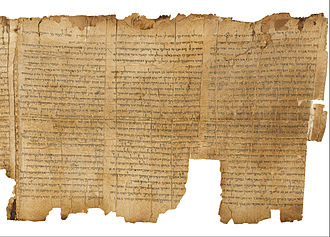

Radiocarbon dating (also referred to as carbon dating or carbon-14 dating) is a method for determining the age of an object containing organic material by using the properties of radiocarbon, a radioactive isotope of carbon.

The method was developed in the late 1940s at the University of Chicago by Willard Libby, based on the constant creation of radiocarbon (¹⁴C) in the Earth's atmosphere by the interaction of cosmic rays with atmospheric nitrogen. The resulting ¹⁴C combines with atmospheric oxygen to form radioactive carbon dioxide, which is incorporated into plants by photosynthesis; animals then acquire ¹⁴C by eating the plants. When the animal or plant dies, it stops exchanging carbon with its environment, and thereafter the amount of ¹⁴C it contains begins to decrease as the ¹⁴C undergoes radioactive decay. Measuring the proportion of ¹⁴C in a sample from a dead plant or animal, such as a piece of wood or a fragment of bone, provides information that can be used to calculate when the animal or plant died. The older a sample is, the less ¹⁴C there is to be detected. Because the half-life of ¹⁴C (the period of time after which half of the ¹⁴C in a given sample will have decayed) is about 5,730 years, the oldest dates that can be reliably measured by this process are approximately 50,000 years ago (over this interval about 99.8% of the ¹⁴C will have decayed), although special preparation methods occasionally make an accurate analysis of older samples possible. In 1960, Libby received the Nobel Prize in Chemistry for his work.
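The decay arithmetic behind these figures can be sketched in a few lines of Python. This is a minimal illustration, assuming simple exponential decay with the 5,730-year half-life quoted above and a known starting level of ¹⁴C; it ignores calibration, fractionation and reservoir corrections, so it is not how laboratories actually report dates. The function names are hypothetical. It reproduces the 99.8% figure: after 50,000 years (about 8.7 half-lives) roughly 0.24% of the original ¹⁴C remains.

```python
import math

HALF_LIFE_YEARS = 5730.0  # approximate half-life of carbon-14, as stated in the text


def fraction_remaining(age_years: float, half_life: float = HALF_LIFE_YEARS) -> float:
    """Fraction of the original 14C still present after age_years of decay.

    Idealized model: N / N0 = (1/2) ** (t / half_life).
    """
    return 0.5 ** (age_years / half_life)


def age_from_fraction(fraction: float, half_life: float = HALF_LIFE_YEARS) -> float:
    """Invert N / N0 = (1/2) ** (t / half_life) to recover t, the time since death.

    Hypothetical helper: assumes the starting 14C level is known exactly and
    applies no calibration or other corrections.
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    return -half_life * math.log2(fraction)


if __name__ == "__main__":
    left = fraction_remaining(50_000)
    print(f"Remaining after 50,000 years: {left:.4%}")    # about 0.24%
    print(f"Decayed after 50,000 years: {1 - left:.1%}")  # about 99.8%
    # Two half-lives leave 25% of the 14C, i.e. 2 * 5,730 = 11,460 years.
    print(f"Age with 25% of 14C left: {age_from_fraction(0.25):.0f} years")
```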

Research has been ongoing since the 1960s to determine what the proportion of ¹⁴C in the atmosphere has been over the past 50,000 years. The resulting data, in the form of a calibration curve, are now used to convert a given measurement of radiocarbon in a sample into an estimate of the sample's calendar age. Other corrections must be made to account for the proportion of ¹⁴C in different types of organisms (fractionation), and the varying levels of ¹⁴C throughout the biosphere (reservoir effects). Additional complications come from the burning of fossil fuels such as coal and oil, and from the above-ground nuclear tests performed in the 1950s and 1960s.

Because the time it takes to convert biological materials to fossil fuels is substantially longer than the time it takes for their ¹⁴C to decay below detectable levels, fossil fuels contain almost no ¹⁴C. As a result, beginning in the late 19th century, there was a noticeable drop in the proportion of ¹⁴C in the atmosphere as the carbon dioxide generated from burning fossil fuels began to accumulate. Conversely, nuclear testing increased the amount of ¹⁴C in the atmosphere, which reached a maximum in about 1965 of almost double the amount present in the atmosphere prior to nuclear testing.

Measurement of radiocarbon was originally done with beta-counting devices, which counted the amount of beta radiation emitted by decaying ¹⁴C atoms in a sample. More recently, accelerator mass spectrometry has become the method of choice; it counts all the ¹⁴C atoms in the sample and not just the few that happen to decay during the measurements. It can therefore be used with much smaller samples (as small as individual plant seeds), and gives results much more quickly.

The development of radiocarbon dating has had a profound impact on archaeology. In addition to permitting more accurate dating within archaeological sites than previous methods, it allows comparison of dates of events across great distances. Histories of archaeology often refer to its impact as the "radiocarbon revolution". Radiocarbon dating has allowed key transitions in prehistory to be dated, such as the end of the last ice age, and the beginning of the Neolithic and Bronze Age in different regions.
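A rough calculation shows why counting decays, as beta counters do, needs far more material than counting ¹⁴C atoms directly, as accelerator mass spectrometry does. The sketch below assumes the same 5,730-year half-life and ignores detector efficiency and background radiation; it computes what fraction of the ¹⁴C atoms in a sample decay during a counting window. The function name and the choice of a 365.25-day year are illustrative assumptions. Over one day the fraction is about 3.3 × 10⁻⁷, roughly one atom in three million.

```python
import math

HALF_LIFE_YEARS = 5730.0               # approximate half-life of carbon-14
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumed year length for unit conversion


def fraction_decaying(window_seconds: float, half_life_years: float = HALF_LIFE_YEARS) -> float:
    """Fraction of the 14C atoms present that decay during a counting window.

    Computes 1 - exp(-lambda * t) via expm1, which stays accurate when the
    fraction is tiny. Detector efficiency and background are ignored.
    """
    decay_constant = math.log(2) / (half_life_years * SECONDS_PER_YEAR)  # per second
    return -math.expm1(-decay_constant * window_seconds)


if __name__ == "__main__":
    for label, seconds in [("1 hour", 3600), ("1 day", 86_400), ("1 week", 7 * 86_400)]:
        f = fraction_decaying(seconds)
        print(f"{label:>7}: {f:.2e} of the 14C atoms decay (about 1 in {1 / f:,.0f})")
```

Because only this tiny share of atoms announces itself during a measurement, a beta counter needs a large sample and long counting times to register enough decays, whereas a method that detects the atoms themselves does not depend on waiting for them to decay.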

