

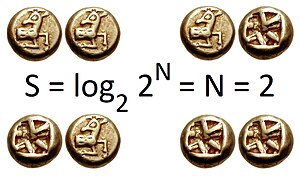

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential states or possible outcomes. It measures the expected amount of information needed to describe the state of the variable, considering the distribution of probabilities across all potential states. Given a discrete random variable $X$, which takes values in the set $\mathcal{X}$ and is distributed according to $p\colon \mathcal{X}\to[0,1]$, the entropy is $\mathrm{H}(X) := -\sum_{x\in\mathcal{X}} p(x)\log p(x)$, where $\Sigma$ denotes the sum over the variable's possible values.[Note 1] The choice of base for $\log$, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), base e gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable, $\mathrm{H}(X) = \mathbb{E}[-\log p(X)]$.[1]
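As a minimal sketch of the definition above, the following Python snippet evaluates the sum for a distribution given as a list of probabilities. The function name `entropy` and the example distributions are illustrative assumptions, not part of the source; terms with zero probability are skipped, following the usual convention that $0 \log 0$ is taken as 0.

```python
import math


def entropy(probs, base=2.0):
    """Shannon entropy of a discrete distribution (hypothetical helper).

    probs: probabilities of the possible outcomes, assumed to sum to 1.
    base:  logarithm base; 2 gives bits, math.e gives nats, 10 gives hartleys.
    """
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Skip p == 0 terms (convention: 0 * log 0 = 0).
    return -sum(p * math.log(p, base) for p in probs if p > 0)


fair_coin = [0.5, 0.5]      # illustrative distribution
biased_coin = [0.9, 0.1]    # illustrative distribution

print(entropy(fair_coin))              # entropy in bits
print(entropy(biased_coin))            # lower than the fair coin
print(entropy(fair_coin, math.e))      # same distribution, measured in nats
```

The fair coin yields one bit, while the biased coin yields less, because its outcome is more predictable. Changing the base only rescales the result by a constant factor.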

The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication",[2][3] and is also referred to as Shannon entropy. Shannon's theory defines a data communication system composed of three elements: a source of data, a communication channel, and a receiver. The "fundamental problem of communication" – as expressed by Shannon – is for the receiver to be able to identify what data was generated by the source, based on the signal it receives through the channel.[2][3] Shannon considered various ways to encode, compress, and transmit messages from a data source, and proved in his source coding theorem that the entropy represents an absolute mathematical limit on how well data from the source can be losslessly compressed onto a perfectly noiseless channel. Shannon strengthened this result considerably for noisy channels in his noisy-channel coding theorem.
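The compression limit can be illustrated with a concrete code. The sketch below builds a binary Huffman code, a technique chosen here only for illustration and not named in the text above, and compares its average codeword length with the entropy in bits. The function `huffman_code_lengths` and the example distributions are assumptions made for this example.

```python
import heapq
import math


def huffman_code_lengths(probs):
    """Codeword length of each symbol in a binary Huffman code (illustrative)."""
    # Heap entries: (subtree probability, tie breaker, symbol indices in subtree).
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    tie = len(probs)
    while len(heap) > 1:
        p1, _, s1 = heapq.heappop(heap)
        p2, _, s2 = heapq.heappop(heap)
        # Merging two subtrees adds one bit to every symbol they contain.
        for i in s1 + s2:
            lengths[i] += 1
        heapq.heappush(heap, (p1 + p2, tie, s1 + s2))
        tie += 1
    return lengths


def entropy_bits(probs):
    """Shannon entropy in bits, skipping zero-probability outcomes."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def average_length(probs):
    """Expected codeword length of the Huffman code for probs."""
    lengths = huffman_code_lengths(probs)
    return sum(p * n for p, n in zip(probs, lengths))


for probs in ([0.5, 0.25, 0.125, 0.125], [0.4, 0.3, 0.2, 0.1]):
    print(entropy_bits(probs), average_length(probs))
```

For the first distribution, whose probabilities are all powers of 1/2, the average length equals the entropy. For the second, the average length is slightly above the entropy but never below it, in line with the limit described above.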

Entropy in information theory is directly analogous to the entropy in statistical thermodynamics. The analogy results when the values of the random variable designate energies of microstates, so Gibbs's formula for the entropy is formally identical to Shannon's formula. Entropy has relevance to other areas of mathematics such as combinatorics and machine learning. The definition can be derived from a set of axioms establishing that entropy should be a measure of how informative the average outcome of a variable is. For a continuous random variable, differential entropy is analogous to entropy. The definition $\mathbb{E}[-\log p(X)]$ generalizes the above.
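For comparison, the formulas mentioned in this paragraph can be written side by side. The notation is an assumption made here for illustration: $p_i$ is the probability of microstate $i$, $k_\mathrm{B}$ is the Boltzmann constant, and $f$ is the probability density of a continuous random variable.

$$
\begin{aligned}
\text{Shannon entropy:} \quad & \mathrm{H}(X) = -\sum_{x\in\mathcal{X}} p(x)\log p(x) \\
\text{Gibbs entropy:} \quad & S = -k_\mathrm{B} \sum_{i} p_i \ln p_i \\
\text{Differential entropy:} \quad & h(X) = \mathbb{E}[-\log f(X)] = -\int f(x)\log f(x)\,dx
\end{aligned}
$$

With the natural logarithm, the Gibbs and Shannon expressions differ only by the constant factor $k_\mathrm{B}$.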


- Pathria, R. K.; Beale, Paul (2011). Statistical Mechanics (Third ed.). Academic Press. p. 51. ISBN 978-0123821881.

- Shannon, Claude E. (July 1948). "A Mathematical Theory of Communication". Bell System Technical Journal. 27 (3): 379–423. doi:10.1002/j.1538-7305.1948.tb01338.x. hdl:10338.dmlcz/101429.

- Shannon, Claude E. (October 1948). "A Mathematical Theory of Communication". Bell System Technical Journal. 27 (4): 623–656. doi:10.1002/j.1538-7305.1948.tb00917.x. hdl:11858/00-001M-0000-002C-4317-B.
