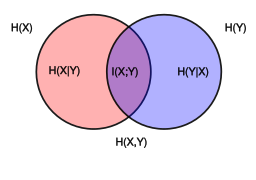

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual dependence between the two variables. More specifically, it quantifies the "amount of information" (in units such as shannons (bits), nats or hartleys) obtained about one random variable by observing the other random variable. The concept of mutual information is intimately linked to that of entropy of a random variable, a fundamental notion in information theory that quantifies the expected "amount of information" held in a random variable.
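For discrete variables, the link to entropy can be made concrete with the identity $I(X;Y) = H(X) + H(Y) - H(X,Y)$. The sketch below assumes the joint distribution is given as a small table of probabilities `joint[x][y]`. The function names and the example channel are hypothetical illustrations. Changing the logarithm base switches the unit between shannons (base 2), nats (base $e$) and hartleys (base 10).

```python
import math

def entropy(probs, base=2.0):
    """Shannon entropy of a discrete distribution given as an iterable of probabilities."""
    # Terms with p == 0 contribute nothing (0 * log 0 is taken as 0).
    return -sum(p * math.log(p, base) for p in probs if p > 0)

def mutual_information(joint, base=2.0):
    """I(X;Y) = H(X) + H(Y) - H(X,Y) for a joint pmf given as a 2-D list joint[x][y]."""
    px = [sum(row) for row in joint]          # marginal of X (row sums)
    py = [sum(col) for col in zip(*joint)]    # marginal of Y (column sums)
    h_xy = entropy((p for row in joint for p in row), base)
    return entropy(px, base) + entropy(py, base) - h_xy

# Hypothetical example: a noisy binary channel in which Y equals X with probability 0.9.
joint = [[0.45, 0.05],
         [0.05, 0.45]]
print(mutual_information(joint, base=2))        # in shannons (bits)
print(mutual_information(joint, base=math.e))   # in nats
print(mutual_information(joint, base=10))       # in hartleys
```

For this channel the result in bits is $1 - H_b(0.1) \approx 0.531$, where $H_b$ is the binary entropy function. The three printed values describe the same quantity and differ only by a constant factor.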

Unlike the correlation coefficient, MI is not limited to real-valued random variables or to linear dependence. More generally, it measures how different the joint distribution of the pair $(X, Y)$ is from the product of the marginal distributions of $X$ and $Y$. MI is the expected value of the pointwise mutual information (PMI).
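In the discrete case this reads $I(X;Y) = \sum_{x,y} p_{(X,Y)}(x,y)\,\log \frac{p_{(X,Y)}(x,y)}{p_X(x)\,p_Y(y)}$. This is the Kullback–Leibler divergence $D_{\mathrm{KL}}(P_{(X,Y)} \parallel P_X \otimes P_Y)$, and the logarithm inside the sum is the PMI of the pair $(x, y)$. The minimal sketch below computes a plug-in estimate by treating a list of observed pairs as the exact distribution. It then contrasts MI with the Pearson correlation on $Y = X^2$, with $X$ uniform on $\{-1, 0, 1\}$. The function names are hypothetical.

```python
import math
from collections import Counter

def mi_from_samples(pairs, base=2.0):
    """Plug-in MI: the average PMI under the empirical joint distribution of `pairs`."""
    n = len(pairs)
    pxy = Counter(pairs)
    px = Counter(x for x, _ in pairs)
    py = Counter(y for _, y in pairs)
    total = 0.0
    for (x, y), count in pxy.items():
        p_joint = count / n
        # Pointwise mutual information of this particular (x, y) pair.
        pmi = math.log(p_joint / ((px[x] / n) * (py[y] / n)), base)
        total += p_joint * pmi  # weight each PMI by its joint probability
    return total

def pearson(xs, ys):
    """Pearson correlation coefficient, which only captures linear dependence."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Y = X**2 with X uniform on {-1, 0, 1}: Y is a deterministic but nonlinear function of X.
xs = [-1, 0, 1]
pairs = [(x, x * x) for x in xs]
print(pearson(xs, [y for _, y in pairs]))  # zero: no linear dependence
print(mi_from_samples(pairs))              # about 0.918 bits, equal to H(Y)
```

The correlation is zero, yet the MI equals the full entropy of $Y$. This holds because observing $X$ removes all uncertainty about $Y$.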

The quantity was defined and analyzed by Claude Shannon in his landmark paper "A Mathematical Theory of Communication", although he did not call it "mutual information". The term was coined later by Robert Fano.[2] Mutual information is also known as information gain.

1. Cover, Thomas M.; Thomas, Joy A. (2005). *Elements of Information Theory*. John Wiley & Sons, Ltd. pp. 13–55. ISBN 9780471748823.

2. Kreer, J. G. (1957). "A question of terminology". *IRE Transactions on Information Theory*. 3 (3): 208. doi:10.1109/TIT.1957.1057418.